Requiring unit tests for code review isn’t the greatest motivation for people to write unit tests. Sure, it makes sure unit tests get written, but it doesn’t explain why you should write them.

In general, I like to ask “why?” to figure out why things are done, why they should be done, and what other ways there are to achieve the same goal.

I don’t remember ever getting an explanation of why there was a requirement. I suppose the obvious answer is that we now have a testing infrastructure but not many unit tests, so you need to write them. But still, that’s not a great motivation for writing tests.

So, why should you write unit tests?

- Make sure your code does what you want it to do

- Speed up development and optimizations/refactoring

- Create better interfaces and functionality

- Get other people to use your contribution

- Make sure nobody else breaks your feature

This isn’t an exhaustive list, but these are things I’ve noticed from my own experiences.

Make sure your code does what you want it to do. The first reason is somewhat obvious, but its effect isn’t. I believe one big benefit is that it changes how you write code. Specifically, it speeds up development because you don’t have to completely think through what you’re doing up front. That might not sound too great, so I’ll try to give an example.

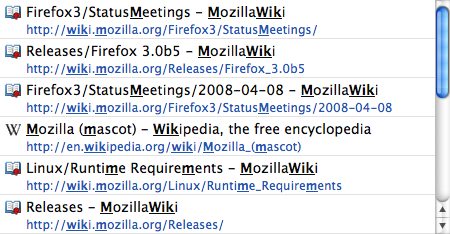

When working on word boundary matching for the AwesomeBar, I had automated unit tests set up with a set of pages and a set of searches, which checked that searches matched only on word boundaries (after spaces, dots, and slashes, for example, but not after other characters). I needed to write a string processing utility that matched on standard word boundaries as well as CamelCase without breaking search for ideographic languages like Chinese.

I was never one for too much theory, so instead of sitting around and explicitly drawing out all the boundary conditions, I made simple code changes, recompiled, and checked whether the tests passed. Instead of spending 10 minutes thinking deeply about how to deal with multiple word boundaries in a row, I made a one-second change and ran the tests to see that they passed.

The point is that you can spend less time reasoning about whether the code will do what you want and just let the computer do the checking. You already specified what the code should do in your tests, and the computer can quickly check that they pass. This also speeds up development (or perhaps just spares my impatience) because I don’t need to go through the long process of firing up a debug build to make sure my manually typed search terms find the expected pages; I don’t even need to make sure I have the right profile with the right set of test pages.
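To make that test-first loop concrete, here is a minimal Python sketch of a boundary matcher plus a table of test cases. Everything in it is an assumption for illustration: the function names, the exact boundary rules, and the simplification that ideographs are detected only in the CJK Unified Ideographs block (U+4E00 to U+9FFF). It is not the actual AwesomeBar code.

```python
def is_ideograph(ch: str) -> bool:
    # Simplification (assumption): only the CJK Unified Ideographs block is checked.
    return "\u4e00" <= ch <= "\u9fff"


def is_word_boundary(text: str, i: int) -> bool:
    """Return True if a match is allowed to start at index i of text."""
    if i == 0:
        return True
    prev, cur = text[i - 1], text[i]
    if not prev.isalnum():
        return True  # after a space, dot, slash, or other punctuation
    if prev.islower() and cur.isupper():
        return True  # CamelCase: "CamelCase" has a boundary before the second "C"
    if is_ideograph(cur):
        return True  # ideographic text has no spaces, so any ideograph can start a match
    return False


def matches_on_boundary(text: str, term: str) -> bool:
    """Case-insensitive search that only accepts matches starting on a word boundary."""
    needle = term.lower()
    n = len(term)
    for i in range(len(text) - n + 1):
        if text[i:i + n].lower() == needle and is_word_boundary(text, i):
            return True
    return False


# Each case: (page text, search term, expected result).
CASES = [
    ("www.mozilla.org", "mozilla", True),      # after a dot
    ("mozilla.org/firefox", "firefox", True),  # after a slash
    ("Awesome Bar", "bar", True),              # after a space, case-insensitive
    ("foobar", "bar", False),                  # mid-word is not a boundary
    ("CamelCase", "case", True),               # CamelCase boundary
    ("a..//b", "b", True),                     # several boundary characters in a row
    ("我爱中文", "中文", True),                  # ideographs can match anywhere
]

for text, term, expected in CASES:
    assert matches_on_boundary(text, term) == expected, (text, term)
```

With a table like this, tweaking one rule (say, the CamelCase check) and rerunning the asserts takes seconds, which is the loop described above.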

Speed up development and optimizations/refactoring. I mentioned speeding up optimizations and refactoring, but the former is basically a special case of the latter. Both tasks involve changing the code without changing its functionality. With automated unit tests set up, you can be sure that your refactoring changes don’t break expected functionality. For example, as I was optimizing the AwesomeBar to go faster and use fewer CPU cycles, I relied on the unit tests to make sure searches still provided the same list of URLs.

In fact, one of my unit tests caught a bug in my first optimization: I forgot to check for javascript: URIs. The optimization reuses the previous search results if the new search begins with the same text as the previous one, but we also do some special processing for javascript: URIs. Namely, we don’t show javascript: results unless you explicitly type out “javascript:” at the beginning of the query. So if you searched for “javascript” and then typed “javascript:”, the optimization wouldn’t have shown any javascript: URIs, because the previous results couldn’t have contained any.
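Here is a sketch of how a test that compares the optimized search against a plain full scan catches this kind of bug. The class `IncrementalSearch`, the `check_javascript` switch, and the simple substring matching are hypothetical simplifications, not the real AwesomeBar implementation; passing `check_javascript=False` reproduces the bug.

```python
from typing import List, Optional


def allows_javascript(query: str) -> bool:
    # javascript: results only appear when the query starts with "javascript:".
    return query.lower().startswith("javascript:")


def full_search(query: str, pages: List[str]) -> List[str]:
    """Reference search: scan every candidate page (simple substring match)."""
    q = query.lower()
    return [
        url
        for url in pages
        if q in url.lower()
        and (allows_javascript(query) or not url.lower().startswith("javascript:"))
    ]


class IncrementalSearch:
    """Optimized search that filters the previous results when the query grows."""

    def __init__(self, pages: List[str], check_javascript: bool = True) -> None:
        self.pages = pages
        self.check_javascript = check_javascript
        self.last_query: Optional[str] = None
        self.last_results: List[str] = []

    def search(self, query: str) -> List[str]:
        can_reuse = self.last_query is not None and query.startswith(self.last_query)
        # Going from "javascript" to "javascript:" switches javascript: URIs on, but the
        # previous results were computed with them filtered out, so they can't be reused.
        if (
            self.check_javascript
            and can_reuse
            and allows_javascript(query)
            and not allows_javascript(self.last_query)
        ):
            can_reuse = False
        candidates = self.last_results if can_reuse else self.pages
        results = full_search(query, candidates)
        self.last_query, self.last_results = query, results
        return results


# Unit test: the optimized search must agree with the full scan as the user types.
PAGES = [
    "http://www.mozilla.org/javascript-guide",
    "javascript:void(0)",
    "http://example.com/",
]
QUERIES = ["javascript", "javascript:"]
EXPECTED = [full_search(q, PAGES) for q in QUERIES]

fixed = IncrementalSearch(PAGES)
assert [fixed.search(q) for q in QUERIES] == EXPECTED

buggy = IncrementalSearch(PAGES, check_javascript=False)
assert [buggy.search(q) for q in QUERIES] != EXPECTED  # the test catches the bug
```

The design point is that the test never needs to know how the optimization works; it only checks that the fast path and the slow path agree.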

Create better interfaces and functionality. Good APIs are fun to test. I enjoy writing AwesomeBar tests because I just need to provide some search words and a set of expected results. Simple. But you can also have better functionality because the process of writing tests makes you a user of the API. You start thinking about “what else” or “how could this be better.”

For a might-not-make-it-to-Firefox-3 feature that lets you restrict results to non-bookmarked pages or force matches on the URL (by default), I wasn’t sure at first how things should interact if you combined multiple “restricts” or “matches.” By writing the unit tests, I was able to easily look at all possible combinations, such as “must match URL and must match title.” Because I was writing the test, I noticed this potential issue and got it clarified; otherwise, it might have remained a poorly defined feature.
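A sketch of enumerating every option combination in a test with `itertools.product`. The `Page` type, the option names, and especially the rule that every enabled option must hold (AND semantics) are assumptions for illustration; how the options should actually interact is exactly the question the tests raised.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Page:
    url: str
    title: str
    bookmarked: bool


def search(pages, term, unbookmarked_only=False, match_url=False, match_title=False):
    """Hypothetical filter: every enabled option must hold (AND semantics, an assumption)."""
    t = term.lower()
    results = []
    for p in pages:
        in_url, in_title = t in p.url.lower(), t in p.title.lower()
        if unbookmarked_only and p.bookmarked:
            continue
        if match_url and not in_url:
            continue
        if match_title and not in_title:
            continue
        if not (in_url or in_title):
            continue  # default: the term must appear in the URL or the title
        results.append(p)
    return results


PAGES = [
    Page("http://fox.example/", "Foxes", bookmarked=True),
    Page("http://fox.example/den", "Den", bookmarked=False),
    Page("http://other.example/", "Fox facts", bookmarked=False),
]

# Walk every combination of options, as writing the tests forces you to.
for unbookmarked_only, match_url, match_title in product([False, True], repeat=3):
    hits = search(PAGES, "fox", unbookmarked_only, match_url, match_title)
    for p in hits:
        assert not (unbookmarked_only and p.bookmarked)
        assert not match_url or "fox" in p.url.lower()
        assert not match_title or "fox" in p.title.lower()

# "Must match URL and must match title" under the assumed AND semantics.
assert search(PAGES, "fox", match_url=True, match_title=True) == [PAGES[0]]
```

Spelling out the combinations is what surfaces the question; the test then pins down whichever answer gets chosen.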

Get other people to use your contribution. I like to learn by example, so if I want to use something I don’t know how to use, I search for existing uses of it in the codebase. A good description of a class or method in the interface is nice and all, but seeing it actually used is much more helpful for me. Ideally, test cases exercise representative usages of a feature, so someone reading the test code should be able to figure out how to use the interface.

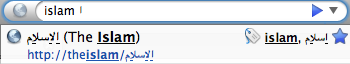

I’ve written a couple of unit tests for the PluralForm module, and they serve as additional examples of how to use PluralForm. I’ve also written some PluralForm documentation [developer.mozilla.org] on how to use it in Firefox and extensions, but without the unit tests, someone searching for PluralForm would have to rely on its current usage in the Download Manager. That would be more confusing, since they would have to look through a lot of Download Manager code unrelated to PluralForm.
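PluralForm itself is JavaScript, but the idea of tests doubling as documentation carries over to any language. Below is a hypothetical Python stand-in, loosely modeled on PluralForm taking a semicolon-separated list of word forms; the function names, the `#1` placeholder, and the single English-style rule (one form for exactly 1, another for everything else) are assumptions, and real locales need other rules.

```python
def pick_plural_form(n: int, forms: str) -> str:
    """Hypothetical getter: choose one of the semicolon-separated forms.

    Assumes an English-style rule: form 0 for exactly 1, form 1 otherwise.
    """
    words = forms.split(";")
    return words[0] if n == 1 else words[1]


def format_count(n: int, forms: str) -> str:
    """Pick the right form, then substitute the number for the "#1" placeholder."""
    return pick_plural_form(n, forms).replace("#1", str(n))


# Tests that double as usage examples: pass the count plus every form.
DOWNLOADS = "#1 download;#1 downloads"
assert format_count(1, DOWNLOADS) == "1 download"
assert format_count(0, DOWNLOADS) == "0 downloads"
assert format_count(3, DOWNLOADS) == "3 downloads"
```

Someone skimming these asserts learns the calling convention (count first, all forms in one string, placeholder substituted afterward) without wading through unrelated code.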

Make sure nobody else breaks your feature. You just worked really hard on adding a new feature. You don’t want it broken. I suppose an alternative motivation is that the blame goes to whoever breaks your test. Without the test, you might be stuck fixing the breakage weeks or months later. Also, with unit tests, it’s easier to see how the code is being used, so the person breaking the test should be able to fix it faster.

Tests aren’t just “for the future.” They help fix things now and help you go faster. And if you’re doing a lot of development and frequently touching the same piece of code, such as maintaining a huge stack of patches for a single file, unit tests help make sure each patch works without the others. If the tests fail, you know it isn’t safe to “cherry-pick” that patch out of the stack and check it in.

I’m sure there are other benefits of writing automated unit tests, but I think this is a good starting list of motivations. Hopefully better than “why didn’t you write a test?!” 😉